Introduction

Over the past decades, software engineering has evolved from design patterns to microservices and API integrations. A newer development, the Model Context Protocol (MCP), is now emerging as a potential game-changer. Often described as the "USB-C port for AI applications," MCP standardizes how applications provide context to large language models (LLMs), connecting traditional systems with AI-driven workflows.

"MCP is not just a protocol—it's the foundation for a new era of AI-driven software architecture where context becomes the currency of intelligent automation."

Foundations: Software Design Patterns, Microservices, and API Integration

Software Design Patterns

Design patterns (creational, structural, and behavioral) have long helped teams build applications that are scalable, maintainable, and adaptable. Whether it is a Singleton managing a shared resource or an Observer handling events, these patterns give developers a shared vocabulary that simplifies collaboration and maintenance.

Microservices Architecture

Modern architectures increasingly rely on microservices. These independently deployable services embody principles like decoupling and domain-driven design, with APIs acting as the glue that binds diverse system components.

API & Integration Patterns

From synchronous request-response interactions to asynchronous event-driven designs and webhooks, API integration patterns underpin today’s distributed systems.

Introducing the Model Context Protocol (MCP)

MCP is an open standard that connects applications to LLMs by standardizing how contextual data is exchanged. Much like a universal connector, it gives existing and legacy systems one consistent way to share rich, relevant context with AI models, which can improve response accuracy and make security and scaling easier to manage.
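To make the idea of standardized context exchange concrete, here is a minimal sketch of the kind of message an MCP client sends. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method used to invoke a tool. The helper `build_tool_call`, the tool name `lookup_customer`, and its arguments are hypothetical and exist only for illustration.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request carries its own id


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request shaped like an MCP `tools/call` message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call("lookup_customer", {"customer_id": "C-1001"})
print(json.dumps(request, indent=2))
```

Because every tool is invoked through the same envelope, a client does not need custom glue code for each backend system it talks to.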

Implementation Concepts and Architectural Placement of MCP

- Centralized Orchestration Layer: In this approach, dedicated MCP servers sit atop existing APIs and microservices. This offers unified observability and easier policy enforcement but introduces potential single points of failure.

- Embedded or Layered Integration: Alternatively, MCP functionalities may be built into existing service layers. This reduces latency and deepens integration, though it can complicate consistency and maintenance.

- Hybrid Approaches: A hybrid model leverages both centralized oversight and local control, adapting well to complex environments that demand both global governance and rapid, localized responses.
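The trade-off between the three placements can be sketched in a few lines. This is a toy model, not an MCP implementation: `HybridRouter`, `central_gateway`, `local_price_lookup`, and the capability names are all hypothetical. Capabilities a service hosts itself are answered locally (the embedded path), and everything else goes through one central gateway, where policy and logging live (the centralized path).

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], dict]


class HybridRouter:
    """Toy router: embedded handlers first, central gateway as fallback."""

    def __init__(self, local_handlers: Dict[str, Handler], central_gateway: Handler):
        self.local_handlers = local_handlers
        self.central_gateway = central_gateway

    def route(self, capability: str, payload: dict) -> dict:
        handler = self.local_handlers.get(capability)
        if handler is not None:
            # Embedded path: no extra hop, but no central audit trail.
            return handler(payload)
        # Centralized path: one place for policy and observability.
        return self.central_gateway({"capability": capability, **payload})


audit_log: List[str] = []


def central_gateway(request: dict) -> dict:
    audit_log.append(request["capability"])  # unified observability
    return {"source": "central", "capability": request["capability"]}


def local_price_lookup(payload: dict) -> dict:
    return {"source": "local", "sku": payload["sku"]}


router = HybridRouter({"price_lookup": local_price_lookup}, central_gateway)
local_result = router.route("price_lookup", {"sku": "A-42"})
central_result = router.route("compliance_check", {"account": "ACC-7"})
print(local_result, central_result, audit_log)
```

Note that only the centrally routed request appears in `audit_log`, which mirrors the consistency cost of embedding mentioned above.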

MCP's Role in Enhancing AI and LLM Workflows

By standardizing context injection, MCP helps LLMs generate more precise, actionable responses. It changes how AI systems understand and interact with enterprise data: with contextually aware responses, they can support automated decision-making, dynamic API integrations, and intelligent orchestration across microservices, and so drive real business outcomes.

Agentic Systems with SmolAgents

One approach to building agentic systems is smolagents, a lightweight open-source library from Hugging Face in which code agents generate and execute code dynamically, with support for sandboxed execution. Its agents facilitate:

- Dynamic Workflow Execution: On-demand code generation to handle multi-step tasks with real-time adaptation to changing requirements.

- Secure Execution: Running in sandbox-like conditions to minimize risks when interacting with external services and sensitive data.

- Enhanced Autonomy: Equipping LLMs with the ability to autonomously decide and execute complex sequences without constant human intervention.

Smolagents represent a shift in how agentic systems work: the AI does not only process information but also generates and executes code in real time, which closes the gap between deciding on an action and carrying it out.
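To illustrate the secure-execution idea from the list above, the sketch below checks generated code against an allowlist of syntax before evaluating it. This is not how smolagents implements sandboxing, and a syntax allowlist on its own is not a real security boundary. The function name `run_generated_expression` and the allowlist are assumptions chosen for illustration.

```python
import ast

# Syntax node types a generated arithmetic expression may contain.
ALLOWED_NODES = (
    ast.Expression, ast.BinOp, ast.UnaryOp, ast.Constant,
    ast.Add, ast.Sub, ast.Mult, ast.Div, ast.USub,
)


def run_generated_expression(source: str) -> float:
    """Evaluate model-generated arithmetic, rejecting anything else."""
    tree = ast.parse(source, mode="eval")
    for node in ast.walk(tree):
        if not isinstance(node, ALLOWED_NODES):
            raise ValueError(f"disallowed syntax: {type(node).__name__}")
        if isinstance(node, ast.Constant) and not isinstance(node.value, (int, float)):
            raise ValueError("only numeric constants are allowed")
    # No names, calls, or attribute access pass the checks above,
    # so the expression cannot reach builtins or imported modules.
    return eval(compile(tree, "<generated>", "eval"), {"__builtins__": {}}, {})


print(run_generated_expression("(19.99 * 3) - 5"))

try:
    run_generated_expression("__import__('os').system('ls')")
except ValueError as err:
    print("rejected:", err)
```

A production setup would typically add process or container isolation on top of a check like this, since the goal is to limit what generated code can touch even when the check misses something.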

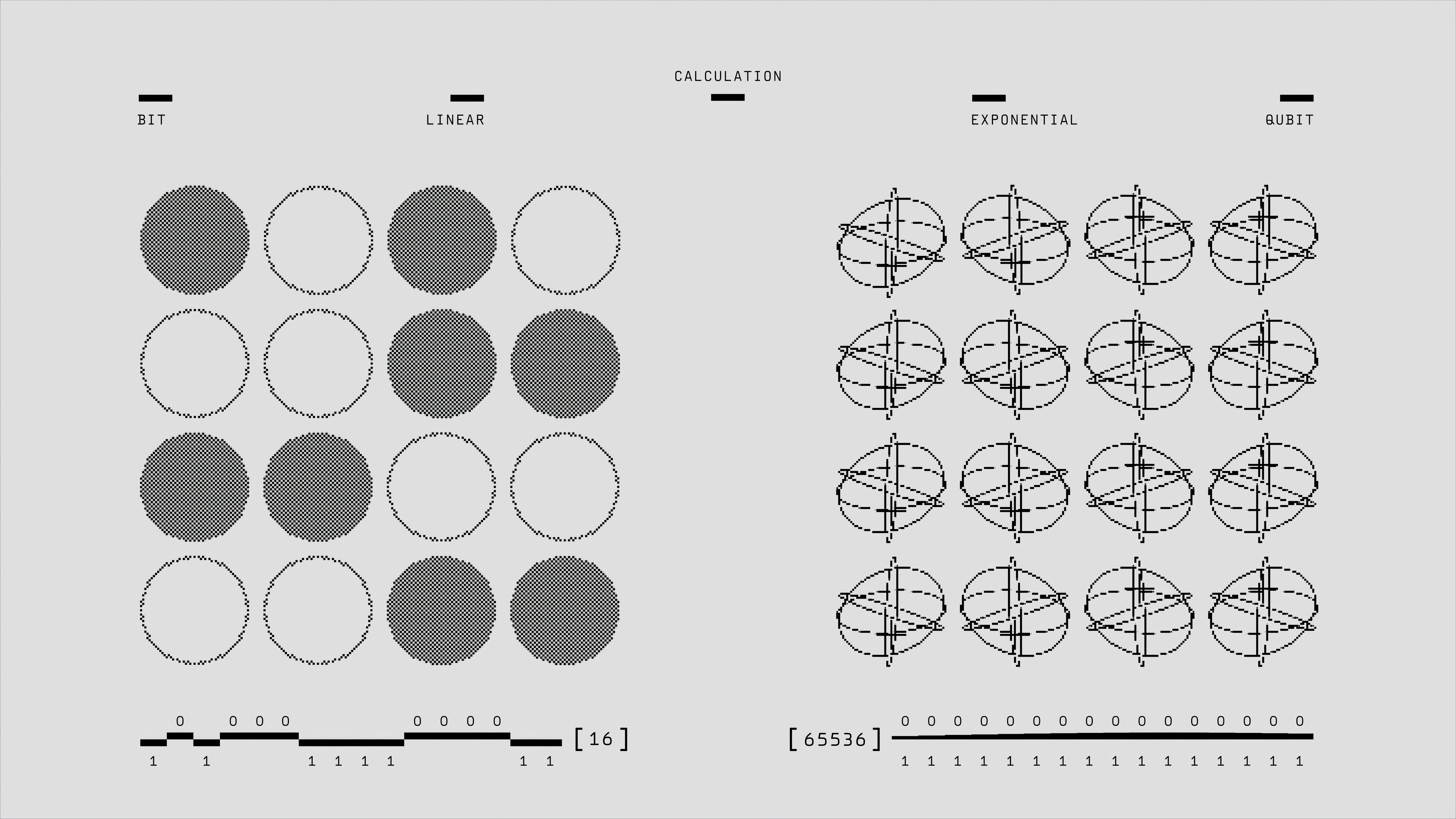

Token Usage and Response Precision

When using commercial or proprietary AI services, every interaction consumes tokens, the units in which both input and output are measured. Mindful management of token usage is critical for:

- Cost Efficiency: Avoiding unnecessary token consumption to keep expenses in check while maintaining system performance.

- Performance Optimization: Reducing latency by ensuring LLMs process only the required context, improving response times.

- User Trust: Ensuring predictability and precision in responses through optimized context delivery.

Techniques such as GraphQL queries within MCP frameworks can help here. Because the caller names exactly the fields it wants, the context passed to the model contains only what is needed, which reduces token usage and, with it, cost and latency.
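The effect of field selection on token usage can be sketched without a GraphQL server. The example below projects a record down to the requested fields, in the spirit of a GraphQL selection set, and compares rough token estimates before and after. The record, the `select_fields` helper, and the four-characters-per-token heuristic are all assumptions for illustration; real tokenizers vary by model.

```python
import json


def select_fields(record: dict, selection: dict) -> dict:
    """Keep only the fields named in `selection`, recursing into nested dicts.

    `selection` maps a field name to True (keep as is) or to a nested
    selection, loosely mirroring a GraphQL selection set.
    """
    result = {}
    for field, sub in selection.items():
        if field not in record:
            continue
        value = record[field]
        if isinstance(sub, dict) and isinstance(value, dict):
            result[field] = select_fields(value, sub)
        else:
            result[field] = value
    return result


def estimate_tokens(payload: dict) -> int:
    """Very rough estimate: about four characters per token (an assumption)."""
    return len(json.dumps(payload)) // 4


# Hypothetical customer record returned by a backend service.
customer = {
    "id": "C-1001",
    "name": "Ada Example",
    "address": {"city": "Lisbon", "street": "Rua Exemplo 1", "postcode": "1000-001"},
    "orders": [{"id": n, "total": 10.0 * n} for n in range(1, 51)],
    "marketing_notes": "long free-text field " * 40,
}

trimmed = select_fields(customer, {"id": True, "name": True, "address": {"city": True}})
print(trimmed)
print(estimate_tokens(customer), "->", estimate_tokens(trimmed))
```

The order history and free-text notes never reach the model unless a request explicitly selects them, which is where most of the savings come from.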

MCP vs. A2A: Bridging Agent-to-Tool and Agent-to-Agent Communication

While both MCP and Agent-to-Agent (A2A) protocols fall under the agentic systems umbrella, they serve different purposes:

- MCP: Agent-to-Tool Interface. Acts as a universal interface between agents and external tools, databases, or APIs, standardizing the way rich context is delivered to AI models.

- A2A: Agent-to-Agent Communication. Focuses on direct agent-to-agent communication, enabling specialized agents to share information and coordinate tasks within an ecosystem.

In practice, these approaches are complementary. MCP extends an agent's reach outward to tools and data, while A2A handles the dialogue among agents, so the two can be combined into one cohesive network.
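The difference between the two kinds of interaction can be shown with a toy model. The sketch below does not use the MCP or A2A wire formats; the `Agent` class, the agent names, and the `inventory_lookup` tool are hypothetical. It only shows where each kind of call sits: one agent asks another for help (agent-to-agent), and the second agent uses a tool to answer (agent-to-tool).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    name: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    inbox: List[str] = field(default_factory=list)

    def call_tool(self, tool: str, argument: str) -> str:
        # Agent-to-tool: the kind of interaction MCP standardizes.
        return self.tools[tool](argument)

    def send(self, other: "Agent", message: str) -> None:
        # Agent-to-agent: the kind of interaction A2A standardizes.
        other.inbox.append(f"{self.name}: {message}")


def inventory_lookup(sku: str) -> str:
    stock = {"A-42": 7}  # hypothetical inventory data
    return f"{sku} has {stock.get(sku, 0)} units in stock"


planner = Agent("planner")
stock_agent = Agent("stock_agent", tools={"inventory_lookup": inventory_lookup})

planner.send(stock_agent, "check stock for A-42")            # agent-to-agent
answer = stock_agent.call_tool("inventory_lookup", "A-42")   # agent-to-tool
stock_agent.send(planner, answer)                            # agent-to-agent

print(planner.inbox)
```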

"The future of agentic systems lies not in choosing between MCP and A2A, but in orchestrating them together to create truly intelligent, interconnected AI ecosystems."

Real-World Use Cases and Practical Scenarios

Consider a large enterprise deploying a centralized MCP layer for unified context management across microservices, or a latency-sensitive application that embeds MCP functionality within its service clusters. In even more complex cases, a hybrid approach can blend centralized oversight with localized control to meet diverse operational needs.

- Enterprise Integration: Financial institutions using MCP to provide AI agents with real-time access to customer data, transaction histories, and compliance databases for personalized service delivery.

- Healthcare Systems: Medical AI assistants leveraging MCP to access patient records, treatment protocols, and research databases while maintaining strict privacy controls.

- E-commerce Platforms: AI-powered recommendation engines using MCP to integrate inventory data, customer preferences, and market trends for dynamic product suggestions.

Challenges, Considerations, and Future Outlook

While the adoption of MCP and its allied innovations promises enhanced integration and AI workflow efficiency, it also brings challenges such as integration complexity, security risks, and increased operational overhead. A phased rollout, robust automation, and continuous monitoring are essential to overcome these obstacles.

- Integration Complexity: Managing the transition from legacy systems to MCP-enabled architectures requires careful planning and extensive testing.

- Security Considerations: Ensuring secure context sharing while maintaining data privacy and compliance with regulatory requirements.

- Operational Overhead: Managing increased system complexity and the need for specialized skills to maintain MCP-enabled infrastructure.
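One common way to approach the security consideration above is to redact sensitive fields before any context leaves the service that owns the data. The sketch below is a minimal illustration under stated assumptions: the `redact` helper, the field names in `SENSITIVE_FIELDS`, and the sample record are hypothetical, and a real deployment would derive its policy from the applicable regulatory requirements.

```python
SENSITIVE_FIELDS = {"ssn", "date_of_birth", "card_number"}  # hypothetical policy


def redact(context, sensitive=SENSITIVE_FIELDS):
    """Return a copy of `context` with sensitive fields masked at any depth."""
    if isinstance(context, dict):
        return {
            key: "[REDACTED]" if key in sensitive else redact(value, sensitive)
            for key, value in context.items()
        }
    if isinstance(context, list):
        return [redact(item, sensitive) for item in context]
    return context


# Hypothetical patient record, for illustration only.
patient = {
    "patient_id": "P-17",
    "ssn": "000-00-0000",
    "visits": [{"date": "2024-03-01", "card_number": "4111 0000 0000 0000"}],
}

safe_context = redact(patient)
print(safe_context)
```

The original record is left unchanged, so the owning service keeps full data while the model only ever sees the masked copy.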

Looking to the future, the evolution of MCP—coupled with innovations in smolagents and A2A protocols—will usher in a more interconnected, agile, and intelligent software ecosystem.

Conclusion

By integrating traditional software design patterns with modern microservices architectures and pioneering protocols such as MCP, organizations can drive unprecedented innovation in AI and LLM applications. With the additional insights into smolagents, careful token management, and a clear differentiation from A2A communication strategies, technology leaders have a bold roadmap ahead.

Embracing these advanced, yet experimental, approaches can help shape an agile future where AI systems are both powerful and precisely integrated into business processes. The future of software is not just about code—it's about creating intelligent, interconnected systems that redefine innovation.

"The convergence of MCP, smolagents, and intelligent token management is creating a new paradigm where AI systems become true partners in business transformation, not just tools."

Are you ready to harness MCP and revolutionize your software architecture?